Start the Countdown

Being a human is far easier than building a human.

Take something as simple as playing catch with a friend in the front yard. When you break down this activity into the discrete biological functions required to accomplish it, it's not simple at all. You need sensors, transmitters and effectors. You need to calculate how hard to throw based on the distance between you and your companion. You need to account for sun glare, wind speed and nearby distractions. You need to determine how firmly to grip the ball and when to squeeze the mitt during a catch. And you need to be able to process a number of what-if scenarios: What if the ball goes over my head? What if it rolls into the street? What if it crashes through my neighbor's window?

These questions demonstrate some of the most pressing challenges of robotics, and they set the stage for our countdown. We've compiled a list of the 10 hardest things to teach robots ordered roughly from "easiest" to "most difficult" -- 10 things we'll need to conquer if we're ever going to realize the promises made by Bradbury, Dick, Asimov, Clarke and all of the other storytellers who have imagined a world in which machines behave like people.

10. Blaze a Trail

Moving from point A to point B sounds so easy. We humans do it all day, every day. For a robot, though, navigation -- especially through a single environment that changes constantly or among environments it's never encountered before -- can be tricky business. First, the robot must be able to perceive its environment, and then it must be able to make sense of the incoming data.

Roboticists address the first issue by arming their machines with an array of sensors, scanners, cameras and other high-tech tools to assess their surroundings. Laser scanners have become increasingly popular, although they can't be used in aquatic environments because water tends to disrupt the light and dramatically reduce the sensor's range. Sonar technology offers a viable option for underwater robots, but in land-based applications, it's far less accurate. And, of course, a vision system consisting of a set of integrated stereoscopic cameras can help a robot to "see" its landscape.

Collecting data about the environment is only half the battle. The bigger challenge involves processing that data and using it to make decisions. Many researchers have their robots navigate by using a prespecified map or by constructing a map on the fly. In robotics, the latter approach is known as SLAM -- simultaneous localization and mapping. Mapping describes how a robot converts information gathered with its sensors into a given representation. Localization describes how a robot positions itself relative to the map. In practice, these two processes must occur simultaneously, creating a chicken-and-egg conundrum that researchers have been able to overcome with more powerful computers and advanced algorithms that calculate position based on probabilities.
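
To get a feel for what "calculating position based on probabilities" means, here is a minimal sketch of the localization half only, using a one-dimensional histogram filter. It is not full SLAM and not the method of any particular robot: the corridor map, the sensor accuracy (`p_hit`) and the function names are all assumptions made for the example. The robot holds a belief about which cell it occupies, sharpens that belief after each sensor reading and shifts it after each move.

```python
# Minimal 1-D histogram (Bayes) filter for localization on a known map.
# The map, sensor model and all names are illustrative assumptions.

def sense(belief, world, reading, p_hit=0.8):
    """Bayes update: weight each cell by how well it explains the reading."""
    weighted = [
        b * (p_hit if cell == reading else 1.0 - p_hit)
        for b, cell in zip(belief, world)
    ]
    total = sum(weighted)
    return [w / total for w in weighted]


def move(belief, steps):
    """Shift the belief `steps` cells to the right on a circular corridor."""
    n = len(belief)
    return [belief[(i - steps) % n] for i in range(n)]


# Known map: every cell is either a "door" or a "wall".
world = ["door", "wall", "wall", "door", "wall"]

# Start fully uncertain: equal probability for every cell.
belief = [1.0 / len(world)] * len(world)

# The robot sees a door, moves one cell, sees a wall,
# moves one more cell and sees a wall again.
belief = sense(belief, world, "door")
belief = move(belief, 1)
belief = sense(belief, world, "wall")
belief = move(belief, 1)
belief = sense(belief, world, "wall")

# The most probable cell is the robot's best guess at its position.
best_cell = max(range(len(belief)), key=lambda i: belief[i])
print(best_cell, [round(b, 3) for b in belief])
```

Only one starting point is consistent with all three readings, so the belief piles up on a single cell. A real SLAM system has to estimate the map at the same time, which is what makes the problem so much harder.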

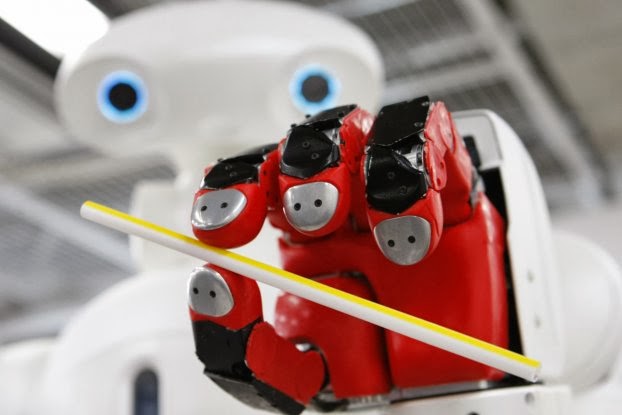

9. Exhibit Dexterity

Robots have been picking up parcels and parts in factories and warehouses for years. But they generally avoid humans in these situations, and they almost always work with consistently shaped objects in clutter-free environments. Life is far less structured for any robot that ventures beyond the factory floor. If such a machine ever hopes to work in homes or hospitals, it will need an advanced sense of touch capable of detecting nearby people and cherry-picking one item from an untidy collection of stuff.

These are difficult skills for a robot to learn. Traditionally, scientists avoided touch altogether, programming their machines to fail if they made contact with another object. But in the last five years or so, there have been significant advances in compliant designs and artificial skin. Compliance refers to a robot's level of flexibility. Highly flexible machines are more compliant; rigid machines are less so.

In 2013, Georgia Tech researchers built a robot arm with springs for joints, which enables the appendage to bend and interact with its environment more like a human arm. Next, they covered the whole thing in "skin" capable of sensing pressure or touch. Some robot skins contain interlocking hexagonal circuit boards, each carrying infrared sensors that can detect anything that comes closer than a centimeter. Others come equipped with electronic "fingerprints" -- raised and ridged surfaces that improve grip and facilitate signal processing.

Combine these high-tech arms with improved vision systems, and you get a robot that can offer a tender caress or reach into cabinets to select one item from a larger collection.

8. Hold a Conversation

Alan M. Turing, one of the founders of computer science, made a bold prediction in 1950: Machines would one day be able to speak so fluently that we wouldn't be able to tell them apart from humans. Alas, robots (even Siri) haven't lived up to Turing's expectations -- yet. That's because speech recognition is very different from natural language processing -- what our brains do to extract meaning from words and sentences during a conversation.

Initially, scientists thought it would be as simple as plugging the rules of grammar into a machine's memory banks. But hard-coding a grammatical primer for any given language has turned out to be impossible. Even providing rules around the meanings of individual words has made language learning a daunting task. Need an example? Think "new" and "knew" or "bank" (a place to put money) and "bank" (the side of a river). Turns out humans make sense of these linguistic idiosyncrasies by relying on mental capabilities developed over many, many years of evolution, and scientists haven't been able to break down these capabilities into discrete, identifiable rules.

As a result, many robots today base their language processing on statistics. Scientists feed them a huge collection of text, known as a corpus, and then let their computers break down the longer text into chunks to find out which words often come together and in what order. This allows the robot to "learn" a language based on statistical analysis. For example, to a robot, the word "bat" accompanied by the word "fly" or "wing" refers to the flying mammal, whereas "bat" followed by "ball" or "glove" refers to the piece of sports equipment.
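
The "bat" example can be sketched in a few lines. This is a toy illustration under loud assumptions: the four-sentence corpus, the sense labels and the stop-word list are invented here, and real systems learn from millions of sentences with far more sophisticated models. The idea is simply to count which words keep company with each meaning and then pick the meaning whose company best matches a new sentence.

```python
from collections import Counter

# Tiny hand-made labeled corpus (an assumption for illustration only).
corpus = [
    ("animal", "the bat spread its wing and began to fly"),
    ("animal", "a bat can fly at night using its wing"),
    ("sports", "he swung the bat and hit the ball"),
    ("sports", "bring your bat ball and glove to the game"),
]

# Very common words carry little meaning, so they are ignored.
STOP_WORDS = {"the", "a", "and", "to", "its", "bat"}


def build_counts(labeled_sentences):
    """Count how often each word appears alongside each sense of 'bat'."""
    counts = {}
    for sense, sentence in labeled_sentences:
        words = [w for w in sentence.split() if w not in STOP_WORDS]
        counts.setdefault(sense, Counter()).update(words)
    return counts


def guess_sense(sentence, counts):
    """Pick the sense whose training sentences share the most words."""
    words = [w for w in sentence.split() if w not in STOP_WORDS]
    scores = {
        sense: sum(word_counts[w] for w in words)
        for sense, word_counts in counts.items()
    }
    return max(scores, key=scores.get)


counts = build_counts(corpus)
print(guess_sense("the bat will fly away", counts))
print(guess_sense("she dropped the bat and glove", counts))
```

The first sentence shares "fly" with the animal examples and the second shares "glove" with the sports examples, so the program never needs a rule that explains what a bat actually is.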

7. Acquire New Skills

Let's say someone who's never played golf wants to learn how to swing a club. He could read a book about it and then try it, or he could watch a practiced golfer go through the proper motions, a faster and easier approach to learning the new behavior.

Roboticists face a similar dilemma when they try to build an autonomous machine capable of learning new skills. One approach, as with the golfing example, is to break down an activity into precise steps and then program the information into the robot's brain. This assumes that every aspect of the activity can be dissected, described and coded, which, as it turns out, isn't always easy to do. There are certain aspects of swinging a golf club, for example, that arguably can't be described, like the interplay of wrist and elbow. These subtle details can be communicated far more easily by showing rather than telling.

In recent years, researchers have had some success teaching robots to mimic a human operator. They call this imitation learning or learning from demonstration (LfD), and they pull it off by arming their machines with arrays of wide-angle and zoom cameras. This equipment enables the robot to "see" a human teacher acting out a specific process or activity. Learning algorithms then process this data to produce a mathematical function that maps visual input to desired actions. Of course, robots in LfD scenarios must be able to ignore certain aspects of their teacher's behavior -- such as scratching an itch -- and deal with correspondence problems, which arise from the ways a robot's anatomy differs from a human's.
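
In its simplest form, a function that turns observations into actions can be nothing more than a lookup of the most similar demonstration. The sketch below uses a one-nearest-neighbor rule as a stand-in for the learning algorithms mentioned above; the feature vector (distance to a ball and its height, in meters), the action names and the demonstrations themselves are hypothetical.

```python
import math

# Demonstrations: (what the robot observed, what the teacher did).
# Features are a hypothetical (distance_to_ball_m, ball_height_m) pair.
demonstrations = [
    ((2.0, 0.0), "walk_forward"),
    ((1.5, 0.1), "walk_forward"),
    ((0.3, 0.0), "bend_and_grasp"),
    ((0.2, 0.1), "bend_and_grasp"),
    ((0.3, 1.0), "reach_up"),
    ((0.2, 1.2), "reach_up"),
]


def policy(observation, demos):
    """Return the action from the demonstration closest to the observation."""
    _, action = min(demos, key=lambda d: math.dist(d[0], observation))
    return action


print(policy((1.8, 0.0), demonstrations))    # far from the ball
print(policy((0.25, 0.05), demonstrations))  # ball at the robot's feet
print(policy((0.25, 1.1), demonstrations))   # ball on a shelf
```

Nothing here handles the hard parts described in the text, such as filtering out an itch being scratched or translating a human arm's motion to a robot's joints. It only shows the mapping step.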

6. Practice Deception

The fine art of deception has evolved to help animals get a leg up on their competitors and avoid being eaten by predators. With practice, the skill can become a highly effective survival mechanism.

For robots, learning how to deceive a person or another robot has been challenging (and that might be just fine with you). Deception requires imagination -- the ability to form ideas or images of external objects not present to the senses -- which is something machines typically lack (see the next item on our list). They're great at processing direct input from sensors, cameras and scanners, but not so great at forming concepts that exist beyond all of that sensory data.

Future robots may be better versed in trickery, though. Georgia Tech researchers have been able to transfer some deceptive skills of squirrels to robots in their lab. First, they studied the fuzzy rodents, which protect their caches of buried food by leading competitors to old, unused caches. Then they coded those behaviors into simple rules and loaded them into the brains of their robots. The machines were able to use the algorithms to determine if deception might be useful in a given situation. If so, they were then able to provide a false communication that led a companion bot away from their hiding place.

5. Anticipate Human Actions

On "The Jetsons," Rosie the robot maid was able to hold conversations, cook meals, clean the house and cater to the needs and wants of George, Jane, Judy and Elroy. To understand Rosie's advanced development, consider this scene from the first episode of season one: Mr. Spacely, George's boss, comes to the Jetson house for dinner. After the meal, Mr. Spacely takes out a cigar and places it in his mouth, which prompts Rosie to rush over with a lighter. This simple action represents a complex human behavior -- the ability to anticipate what comes next based on what just happened.

Like deception, anticipating human action requires a robot to imagine a future state. It must be able to say, "If I observe a human doing x, then I can expect, based on previous experience, that she will likely follow it up with y." This has been a serious challenge in robotics, but humans are making progress. At Cornell University, a team has been working to develop an autonomous robot that can react based on how a companion interacts with objects in the environment. To accomplish this, the robot uses a pair of 3-D cameras to obtain an image of the surroundings. Next, an algorithm identifies the key objects in the room and isolates them from the background clutter. Then, using a wealth of information gathered from previous training sessions, the robot generates a set of likely anticipations based on the motion of the person and the objects she touches. The robot makes a best guess at what will happen next and acts accordingly.

The Cornell robots still guess wrong some of the time, but they're making steady progress, especially as camera technology improves.
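
The "if I observe x, expect y" reasoning can be illustrated with simple counting. This sketch is not the Cornell team's algorithm, which works from 3-D camera data; it assumes the actions have already been recognized and labeled, and the training sessions and action names are made up. The robot tallies what followed each action in the past and predicts the most frequent follower.

```python
from collections import Counter, defaultdict

# Hypothetical training sessions: sequences of observed human actions.
sessions = [
    ["sit_down", "take_out_cigar", "light_cigar"],
    ["take_out_cigar", "light_cigar", "lean_back"],
    ["sit_down", "pick_up_cup", "drink"],
    ["pick_up_cup", "drink", "put_down_cup"],
    ["take_out_cigar", "put_away_cigar"],
]


def learn_transitions(sequences):
    """Count how often each action is followed by each other action."""
    transitions = defaultdict(Counter)
    for seq in sequences:
        for current, following in zip(seq, seq[1:]):
            transitions[current][following] += 1
    return transitions


def anticipate(action, transitions):
    """Return (most likely next action, estimated probability), or None."""
    followers = transitions.get(action)
    if not followers:
        return None  # never seen this action, so no basis for a guess
    best, count = followers.most_common(1)[0]
    return best, count / sum(followers.values())


transitions = learn_transitions(sessions)
print(anticipate("take_out_cigar", transitions))
```

In two of the three sessions where a cigar came out, it was lit next, so a Rosie built this way would reach for the lighter, and would be wrong about a third of the time.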

4. Coordinate Activities With Another Robot

Building a single, large-scale machine -- an android, if you will -- requires significant investments of time, energy and money. Another approach involves deploying an army of smaller, simpler robots that then work together to accomplish more complex tasks.

This brings a different set of challenges. A robot working within a team must be able to position itself accurately in relation to teammates and must be able to communicate effectively -- with other machines and with human operators. To solve these problems, scientists have turned to the world of insects, which exhibit complex swarming behavior to find food and complete tasks that benefit the entire colony. For example, by studying ants, researchers know that individuals use pheromones to communicate with one another.

Robots can use this same "pheromone logic," although they rely on light, not chemicals, to communicate. It works like this: A group of tiny bots is dispersed in a confined area. At first, they explore the area randomly until an individual comes across a trace of light left by another bot. It knows to follow the trail and does so, leaving its own light trace as it goes. As the trail gets reinforced, more and more bots find it and join the wagon train. Some researchers have also found success using audible chirps. Sound can be used to make sure individual bots don't wander too far away or to attract teammates to an item of interest.
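
The trail-following half of this "pheromone logic" can be sketched on a small grid. The example leaves out the random exploration phase and assumes a bot has already stumbled onto a trace; the grid layout, the intensity values and the function name are all invented for illustration.

```python
# Light traces left on a grid: cell (x, y) -> intensity.
# Layout and intensities are assumptions made for illustration.
trail = {(0, 0): 1.0, (1, 0): 1.0, (1, 1): 1.0, (2, 1): 1.0}


def follow_trail(trail, start, deposit=1.0):
    """Walk along neighboring traces, brightening each cell visited."""
    position = start
    path = [position]
    while True:
        # Leave our own light trace, reinforcing the trail.
        trail[position] = trail.get(position, 0.0) + deposit
        x, y = position
        neighbors = [(x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)]
        # Only consider lit cells this bot has not already walked over.
        candidates = [n for n in neighbors if n in trail and n not in path]
        if not candidates:
            return path  # end of the trail
        # Prefer the brightest trace, i.e. the most-traveled route.
        position = max(candidates, key=trail.get)
        path.append(position)


first_path = follow_trail(trail, (0, 0))
second_path = follow_trail(trail, (0, 0))
print(first_path)
print(trail)
```

Each bot that walks the route leaves it brighter than it found it, which is why later bots are more likely to pick the same path.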

3. Make Copies of Itself

God told Adam and Eve, "Be fruitful and multiply, and replenish the earth." A robot that received the same command would feel either flummoxed or frustrated. Why? Because self-replication has proven elusive. It's one thing to build a robot -- it's another thing entirely to build a robot that can make copies of itself or regenerate lost or damaged components.

Interestingly, robots may not look to humans as reproductive role models. Perhaps you've noticed that we don't actually divide into two identical pieces. Simple animals, however, do this all the time. Relatives of jellyfish known as hydra practice a form of asexual reproduction known as budding: A small sac balloons outward from the body of the parent and then breaks off to become a new, genetically identical individual.

Scientists are working on robots that can carry out this basic cloning procedure. Many of these robots are built from repeating elements, usually cubes, that contain identical machinery and the program for self-replication. The cubes have magnets on their surfaces so they can attach to and detach from other cubes nearby. And each cube is divided into two pieces along a diagonal so each half can swivel independently. A complete robot, then, consists of several cubes arranged in a specific configuration. As long as a supply of cubes is available, a single robot can bend over, remove cubes from its "body" to seed a new machine and then pick up building blocks from the stash until two fully formed robots are standing side by side.

2. Act Based on Ethical Principle

As we interact with people throughout the day, we make hundreds of decisions. In each one, we weigh our choices against what's right and wrong, what's fair and unfair. If we want robots to behave like us, they'll need an understanding of ethics.

Like language, coding ethical behavior is an enormous challenge, mainly because a general set of universally accepted ethical principles doesn't exist. Different cultures have different rules of conduct and varying systems of laws. Even within cultures, regional differences can affect how people evaluate and measure their actions and the actions of those around them. Trying to write a globally relevant ethics manual robots could use as a learning tool would be virtually impossible.

With that said, researchers have recently been able to build ethical robots by limiting the scope of the problem. For example, a machine confined to a specific environment -- a kitchen, say, or a patient's room in an assisted living facility -- would have far fewer rules to learn and would have reasonable success making ethically sound decisions. To accomplish this, robot engineers enter information about choices considered ethical in selected cases into a machine-learning algorithm. The choices are based on three sliding-scale criteria: how much good an action would result in, how much harm it would prevent and a measure of fairness. The algorithm then outputs an ethical principle that can be used by the robot as it makes decisions. Using this type of artificial intelligence, your household robot of the future will be able to determine who in the family should do the dishes and who gets to control the TV remote for the night.
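
One way to picture "cases in, principle out" is to treat the principle as a set of weights on the three criteria. The sketch below uses a perceptron-style update as a stand-in for whatever machine-learning algorithm the researchers actually use, which the description above doesn't specify. The scoring scale, the example cases and the function names are all assumptions.

```python
# Each action is scored on three sliding scales (assumed range 0..2):
# (good done, harm prevented, fairness). All cases are hypothetical.
cases = [
    # (action judged ethical, action rejected)
    ((1, 2, 0), (2, 0, 0)),  # preventing harm outweighs extra good
    ((0, 1, 1), (1, 0, 0)),
    ((1, 0, 2), (2, 0, 0)),  # fairness outweighs a little extra good
    ((2, 1, 1), (1, 1, 1)),  # more good wins when all else is equal
]


def score(action, weights):
    """Weighted sum of an action's three criteria."""
    return sum(w * x for w, x in zip(weights, action))


def learn_principle(cases, epochs=20):
    """Adjust weights until every preferred action outscores its rival."""
    weights = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for preferred, rejected in cases:
            if score(preferred, weights) <= score(rejected, weights):
                # The principle disagrees with the expert: nudge it.
                weights = [
                    w + p - r
                    for w, p, r in zip(weights, preferred, rejected)
                ]
    return weights


def choose(action_a, action_b, weights):
    """Apply the learned principle to a new pair of actions."""
    if score(action_a, weights) >= score(action_b, weights):
        return action_a
    return action_b


weights = learn_principle(cases)
print(weights)
print(choose((0, 2, 0), (2, 0, 0), weights))
```

With these cases, the learned weights value harm prevention and fairness more heavily than doing good, so the robot generalizes to a pair of actions it has never been shown. Change the cases and the principle changes with them, which is exactly why limiting the scope of the problem matters.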

1. Feel Emotions

"The best and most beautiful things in the world cannot be seen or even touched. They must be felt with the heart." If this observation by Helen Keller is true, then robots would be destined to miss out on the best and most beautiful. After all, they're great at sensing the world around them, but they can't turn that sensory data into specific emotions. They can't see a loved one's smile and feel joy, or record a shadowy stranger's grimace and tremble with fear.

This, more than anything on our list, could be the thing that separates man from machine. How can you teach a robot to fall in love? How can you program frustration, disgust, amazement or pity? Is it even worth trying?

Some scientists think so. They believe that future robots will integrate both cognitive and emotional systems, and that, as a result, they'll be able to function better, learn faster and interact more effectively with humans. Believe it or not, prototypes already exist that express a limited range of human emotion. Nao, a robot developed by a European research team, has the affective qualities of a 1-year-old child. It can show happiness, anger, fear and pride, all by combining postures with gestures. These display actions, derived from studies of chimpanzees and human infants, are programmed into Nao, but the robot decides which emotion to display based on its interaction with nearby people and objects. In the coming years, robots like Nao will likely work in a variety of settings -- hospitals, homes and schools -- in which they will be able to lend a helping hand and a sympathetic ear.
